KNN and Naive Bayes in Short

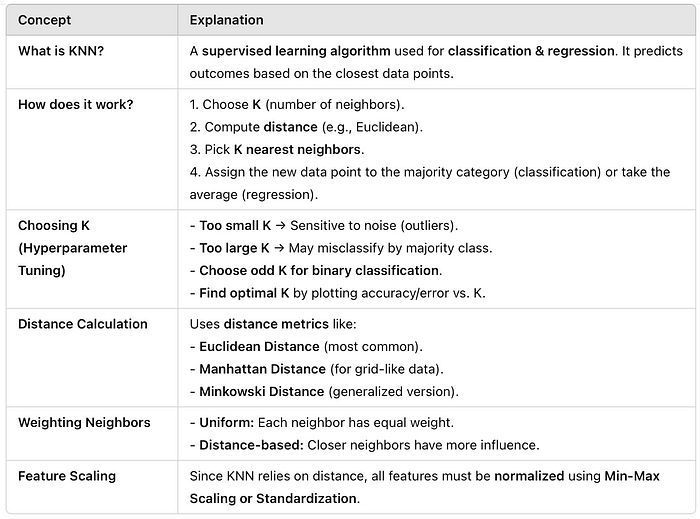

In this chapter, we will explore K-Nearest Neighbors (KNN), a simple algorithm for classification and regression that predicts from the known examples closest to a new data point. We will also study KNN Imputation, a technique that uses the same idea to handle missing data by estimating values from the nearest neighbors. Alongside this, we will look at Naive Bayes, a probabilistic classifier based on Bayes’ theorem that is widely used for text classification, spam detection, and similar tasks.

K-Nearest Neighbors (KNN)

Example: KNN for FD Investment Prediction

- A bank wants to predict whether a new customer will invest in a Fixed Deposit (FD) based on past customer data. The features used are Age and Annual Income.

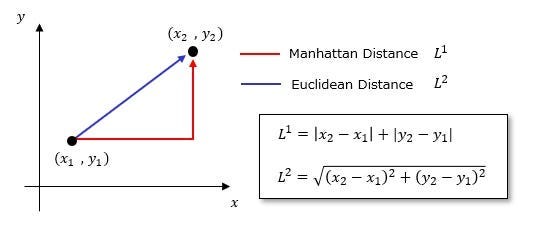

- We have a dataset of past customers for whom we know whether they invested in an FD or not. When a new customer comes in (e.g., Age = 30, Income = 4 lakhs), we calculate the Euclidean distance between this new customer and each existing customer.

- For K = 3, we identify the three closest customers. If the majority of these neighbors invested in an FD, we predict that the…
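
The distance-and-vote procedure described in the bullets above can be sketched in a few lines of plain Python. This is a minimal illustration, not the bank's actual model: the `customers` list is made-up data (it is not the table from the image), and the helper names `euclidean` and `knn_predict` are hypothetical. It also assumes Age and Income are used as raw, unscaled values.

```python
import math
from collections import Counter

# Hypothetical past customers: (age, annual income in lakhs, invested in FD?)
# These values are invented for illustration only.
customers = [
    (25, 3.0, "No"),
    (28, 4.5, "Yes"),
    (32, 4.0, "Yes"),
    (35, 6.0, "Yes"),
    (45, 2.5, "No"),
    (50, 8.0, "No"),
]

def euclidean(a, b):
    """Straight-line distance between two points with the same number of features."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def knn_predict(train, query, k=3):
    """Return the majority label among the k training rows closest to `query`."""
    # Sort past customers by their distance to the new customer (features only).
    by_distance = sorted(train, key=lambda row: euclidean(row[:2], query))
    nearest = by_distance[:k]
    # Count the FD labels of the k nearest neighbors and take the most common one.
    votes = Counter(label for _, _, label in nearest)
    return votes.most_common(1)[0][0], nearest

# New customer from the example: Age = 30, Income = 4 lakhs, K = 3.
prediction, neighbors = knn_predict(customers, (30, 4.0), k=3)
print(prediction)
print(neighbors)
```

With this made-up data, the three nearest customers are (32, 4.0), (28, 4.5), and (25, 3.0). Two of them invested and one did not, so the majority vote predicts "Yes" for the new customer. An odd K is used here so that a two-class vote cannot tie.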